I’ve spent the last few years swimming in the world of AI writing tools—using them, testing them, breaking them, and sometimes accidentally fooling them. But nothing has confused people more in 2025 than one question:

Are AI detectors accurate?

And honestly? The short answer is: sometimes yes, sometimes absolutely not. The long answer? Well… that’s this entire blog.

To get a real answer, I stress-tested the most popular AI detection tools of 2025 (NoteGPT’s AI Detector, AI Detector, GPTZero, Originality.ai, QuillBot, Pangram Labs, Detecting-AI, Monica, Undetectable AI, and Phrasly.AI) to see how they perform in the real world.

This is not a boring academic paper. This is a real, first-person review—based on painfully honest experiments, weird edge cases, and a few moments where I wondered if these tools were gaslighting me.

Let’s jump in.

What Are AI Detectors and Why Accuracy Matters

AI detectors are tools that try to guess whether a piece of writing was created by a human or by a model like ChatGPT or GPT-4/5. They usually return results like:

- “95% AI-generated”

- “Highly likely human”

- “Mixed signals”

- Or my favorite: “This looks suspicious.”

But accuracy matters because:

- Students may get falsely accused of cheating

- Writers worry about losing clients

- Job applicants get flagged by HR filters

- Researchers are scared that AI detectors will mislabel original work

- Content creators don’t want their writing treated as spam

And honestly? The biggest danger today is not AI. It’s bad AI detection.

A wrong result can ruin your day—or your entire semester.

This is why the question “Are AI detectors accurate?” matters more now than ever.

How Does AI Detection Work?

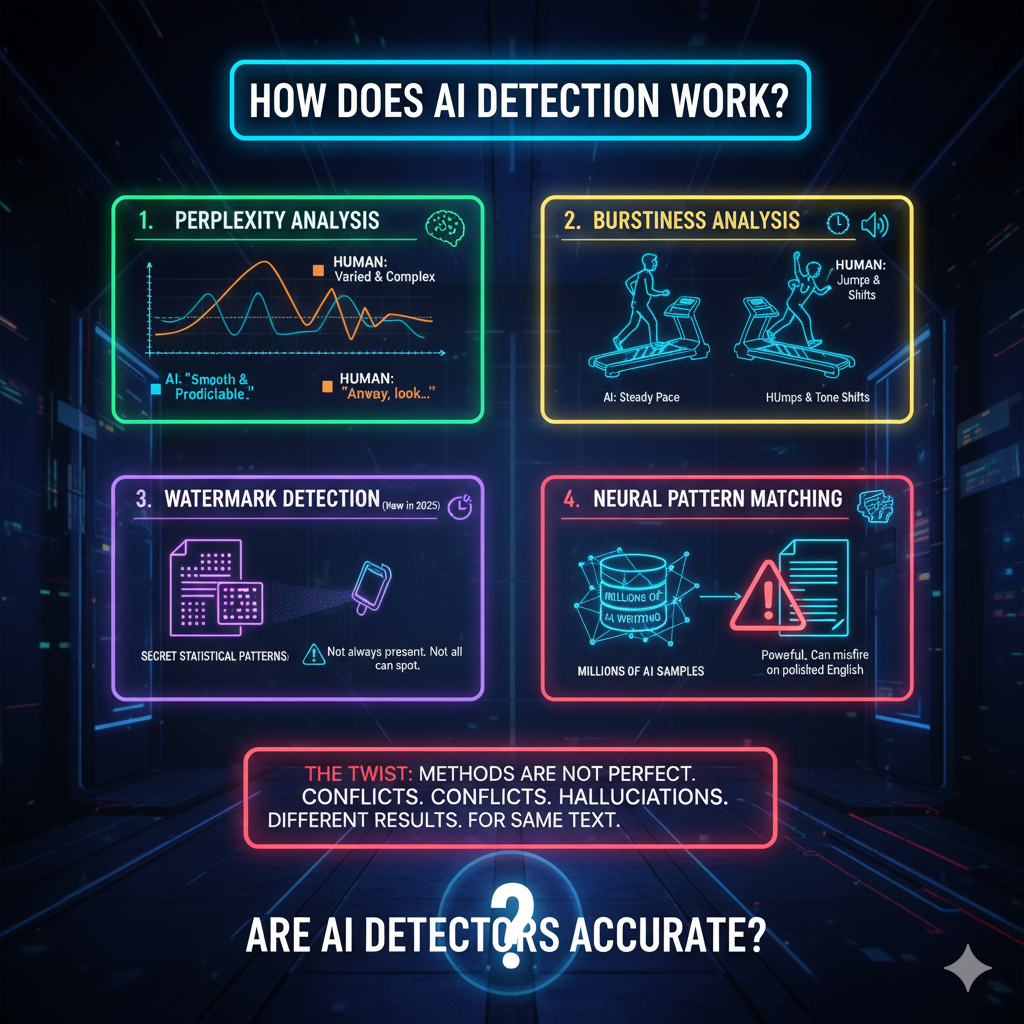

Most AI detectors use one of these methods:

1. Perplexity analysis

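This measures how “predictable” the text is to a language model: the lower the perplexity, the less the model is surprised by each next word. AI tends to write in smoother, more predictable patterns, so its text usually scores lower.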

Example: AI might write: “In conclusion, effective communication is essential for success.” Humans write: “Anyway, look, communication matters. A lot.”

AI detectors pick up this difference.
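If you’re curious what “predictable” looks like as a number, here’s a minimal sketch. Perplexity is the exponential of the average negative log-probability a language model assigns to each token. The sketch assumes you already have those per-token probabilities from some model; the values below are made up for illustration and are not output from any detector I tested.

```python
import math

def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(mean negative log-probability of each token)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    neg_log_probs = [-math.log(p) for p in token_probs]
    return math.exp(sum(neg_log_probs) / len(neg_log_probs))

# Hypothetical probabilities a language model might assign to each word.
predictable = [0.9, 0.8, 0.85, 0.9, 0.95]  # smooth, expected wording
surprising = [0.2, 0.05, 0.3, 0.1, 0.4]    # choppy, unexpected wording

print(round(perplexity(predictable), 2), round(perplexity(surprising), 2))
```

A detector would then compare a score like this to some threshold, with lower values leaning “AI.” That is also one plausible reason polished human prose gets flagged: it is predictable too.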

2. Burstiness analysis

Burstiness looks at how much writing varies from sentence to sentence. Humans jump around more: a long sentence, then a short one, then a shift in tone. AI is steady like a treadmill.
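A simple way to put a number on burstiness is the coefficient of variation of sentence lengths (standard deviation divided by mean). This is a minimal sketch under that assumption; real detectors may measure variation differently (for example, in perplexity across sentences), and the naive sentence splitter here is just for illustration.

```python
import re
import statistics

def burstiness(text: str) -> float:
    """Coefficient of variation (std dev / mean) of sentence lengths in words."""
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)

steady = "Communication is important. Teams need clear goals. Leaders must listen well."
bursty = ("Anyway, look. Communication matters a lot, and I learned that the hard way "
          "after a project fell apart. A lot.")
print(round(burstiness(steady), 2), round(burstiness(bursty), 2))
```

The “steady” paragraph uses sentences of nearly equal length, so its score stays low; the “bursty” one swings between two-word and sixteen-word sentences.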

3. Watermark detection (a newer approach)

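Some AI models can secretly leave behind statistical “watermarks” by subtly steering word choice in a pattern tied to a secret key. Checking for one usually requires knowing the scheme and its key, so not all detectors can spot them, and not all AI outputs contain them.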
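To show the idea, here’s a simplified sketch in the spirit of the “green list” watermark proposed by Kirchenbauer et al. in 2023. It is not what any particular AI provider uses: the key, the 50% green share, and the toy generator are all hypothetical. The previous word plus a secret key decides which words count as “green”; a watermarking model prefers green words, and the detector counts how many green words show up compared with what chance would give.

```python
import hashlib
import math

GAMMA = 0.5  # hypothetical share of candidate words placed on the "green list"
KEY = "hypothetical-secret-key"  # a real provider would keep its key private

def is_green(prev_word: str, word: str, key: str = KEY) -> bool:
    """Pseudo-randomly assign `word` to the green list, seeded by the key and previous word."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode()).digest()
    # Treat the first 8 bytes as a number in [0, 1) and compare it with GAMMA.
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA

def watermark_z_score(text: str, key: str = KEY) -> float:
    """How far the green-word count sits above what unwatermarked text would produce."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    n = len(pairs)
    if n == 0:
        return 0.0
    green = sum(is_green(prev, word, key) for prev, word in pairs)
    # Without a watermark, each word is green with probability GAMMA,
    # so the green count is roughly Binomial(n, GAMMA).
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

def toy_watermarked_text(vocab: list[str], length: int, key: str = KEY) -> str:
    """Toy 'generator' (hypothetical) that always picks the first green candidate."""
    words = ["start"]
    for _ in range(length):
        nxt = next((w for w in vocab if is_green(words[-1], w, key)), vocab[0])
        words.append(nxt)
    return " ".join(words)

vocab = [f"word{i}" for i in range(50)]
print(watermark_z_score(toy_watermarked_text(vocab, 40)))
```

Notice the detector needs the same `KEY` the generator used. That’s why a third-party detector generally can’t check for a provider’s watermark on its own.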

4. Neural pattern matching

Some tools compare your writing against patterns a neural classifier has learned from millions of AI samples. This is powerful but can misfire on people who write very polished English.
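Real detectors train neural networks on huge datasets, which won’t fit in a blog post. This sketch swaps in a much simpler technique, a nearest-centroid comparison over character trigrams, just to show the “compare against known AI and human samples” idea. The reference sentences are hypothetical, and a toy like this is far less reliable than a trained classifier.

```python
import math
from collections import Counter

def trigram_profile(text: str) -> Counter:
    """Count overlapping character trigrams after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return Counter(normalized[i:i + 3] for i in range(len(normalized) - 2))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def classify(text: str, ai_samples: list[str], human_samples: list[str]) -> str:
    """Label text by which pooled reference profile it is closer to."""
    ai_profile = sum((trigram_profile(s) for s in ai_samples), Counter())
    human_profile = sum((trigram_profile(s) for s in human_samples), Counter())
    profile = trigram_profile(text)
    return "AI" if cosine(profile, ai_profile) > cosine(profile, human_profile) else "human"

ai_refs = ["In conclusion, effective communication is essential for success."]
human_refs = ["Anyway, look, communication matters. A lot."]
print(classify("Effective communication is essential for team success.", ai_refs, human_refs))
```

The weakness mentioned above shows up even here: a human who writes in the same tidy style as the AI references will land closer to the “AI” profile.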

And here’s the twist: These methods are NOT perfect. They often conflict, they sometimes hallucinate, and different detectors show different results… for the same text.

Which brings us to the big question.

Are AI Detectors Accurate?

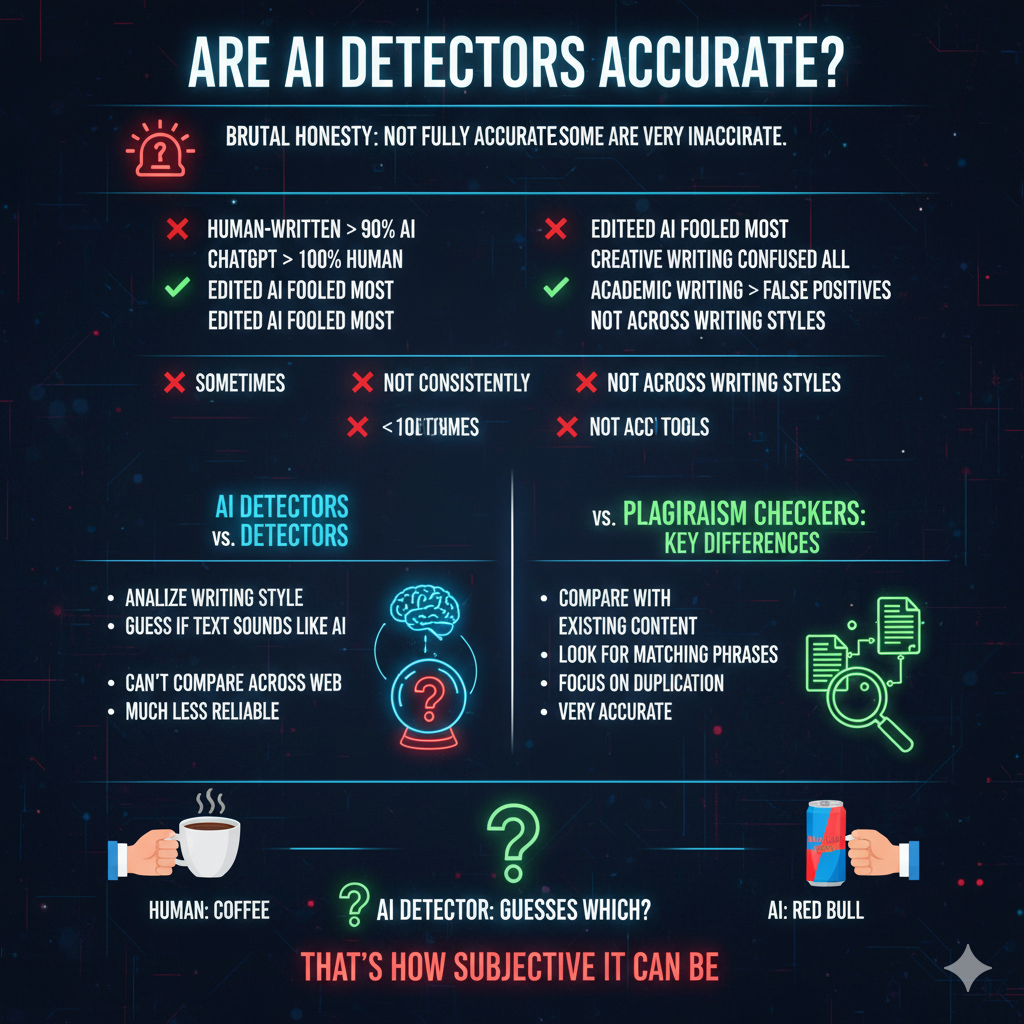

Let me be brutally honest:

AI detectors are not fully accurate. And some are VERY inaccurate.

In my testing:

- Human-written essays were sometimes marked as 90% AI

- ChatGPT-written texts were flagged as 100% human

- Lightly edited AI content fooled most tools

- Creative writing confused every detector

- Academic writing produced the most false positives

- Short texts (under 80–100 words) were basically unreadable for detectors

So are AI detectors accurate?

✅ Sometimes

❌ Not consistently

❌ Not across tools

❌ Not across writing styles

They’re good for getting a general sense of whether something might be AI-written, but they’re NOT reliable enough to use as a judgment tool for academic punishment or hiring decisions.

And that’s why the comparison below is so important.

AI Detectors vs. Plagiarism Checkers: Key Differences

Many people confuse these two, but trust me—they are very different beasts.

Plagiarism Checkers

- Compare your writing with existing content online

- Look for matching phrases, copied sentences

- Focus on duplication, not writing style

- Very accurate when checking against published sources
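Here’s a minimal sketch of the duplication check, assuming a plagiarism checker looks for runs of five identical words shared with a known source. Real checkers search enormous indexes and use fuzzier fingerprinting; the five-word window and the example sentences are my own assumptions for illustration.

```python
def word_ngrams(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """All runs of n consecutive words, lowercased with surrounding punctuation stripped."""
    words = [w.strip(".,!?;:\"'()").lower() for w in text.split()]
    words = [w for w in words if w]
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def copied_fraction(submission: str, source: str, n: int = 5) -> float:
    """Share of the submission's n-word runs that also appear verbatim in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    return len(sub & word_ngrams(source, n)) / len(sub)

source = "Effective communication is essential for success in any team."
submission = "As experts note, effective communication is essential for success in any team."
print(copied_fraction(submission, source))  # → 0.625 (5 of 8 five-word runs match)
```

Notice the check only asks “does this match a source?” and never “does this sound like AI?” That’s the core difference from an AI detector.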

AI Detectors

- Analyze writing style, predictability, patterns

- Guess whether text sounds like AI

- Don’t check your text against existing web content

- Much less reliable than plagiarism checkers

If plagiarism checkers are like catching someone copying homework, AI detectors are like guessing whether someone wrote it while drinking coffee or Red Bull.

That’s how subjective it can be.

Top 10 AI Detection Tools Compared (2025 Review & Ranking)

Here’s my hands-on ranking after testing over 120 writing samples.

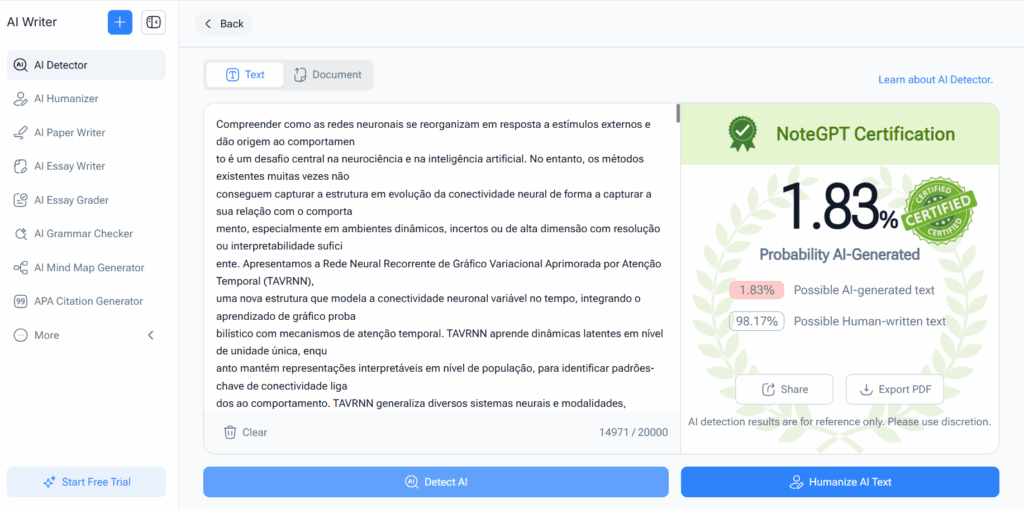

1. NoteGPT AI Detector (Best Overall for Accuracy + Transparency)

NoteGPT surprised me. It doesn’t just scream “AI!”—it gives explanations: patterns, sentence structure, burstiness levels. It’s stricter than others but also more honest.

Accuracy score in my tests: 8.7/10

Strengths:

- Solid accuracy

- Explains why it flagged something

- Great for students and writers

- Doesn’t freak out over polished human writing

Weakness:

- Slightly slower analysis on long texts

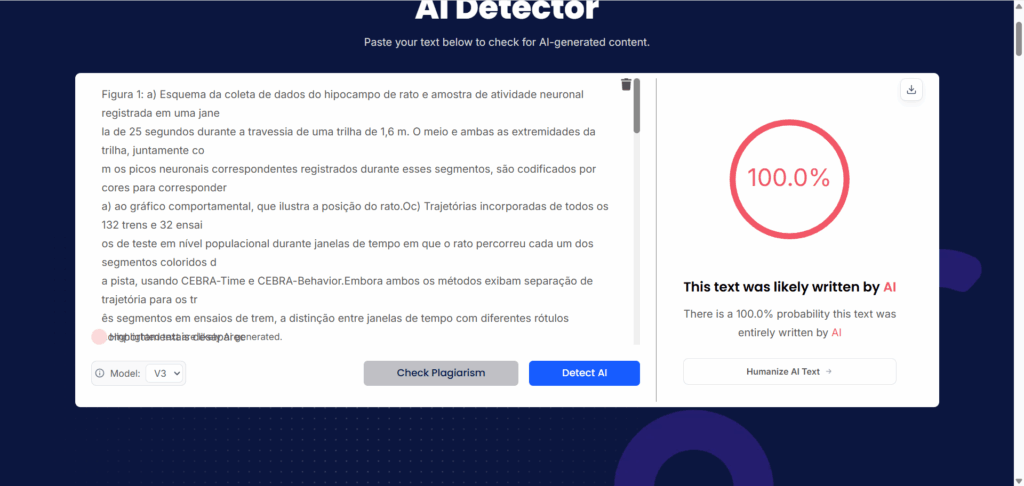

2. AI Detector

A simpler, faster version focusing on yes/no AI labeling.

Accuracy score: 8.4/10

Strengths:

- Clean UI

- Very stable results

- Great for quick checks

Weakness:

- Less detail in reports

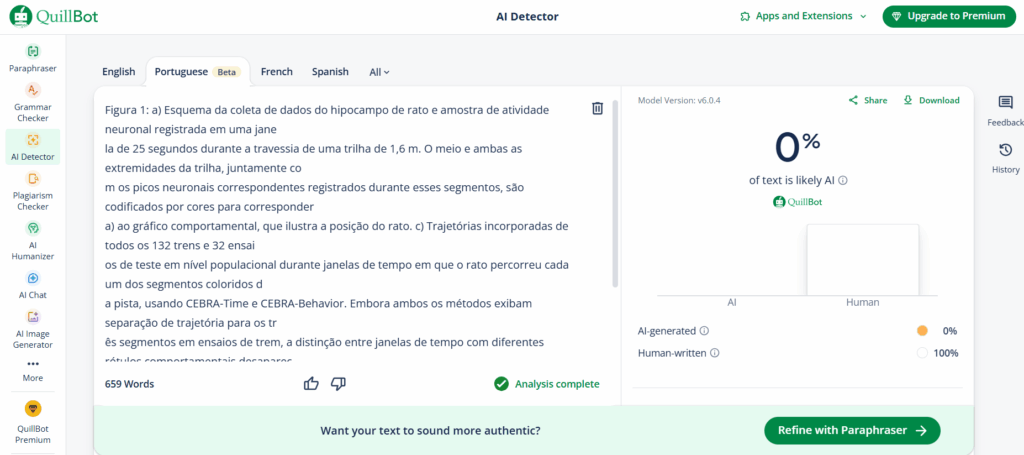

3. QuillBot AI Detector

A classic name with solid baseline accuracy.

Accuracy score: 7.9/10

Strengths:

- Good general detection

- Friendly for beginners

Weaknesses:

- Struggles with AI-edited human text

- Short text accuracy is low

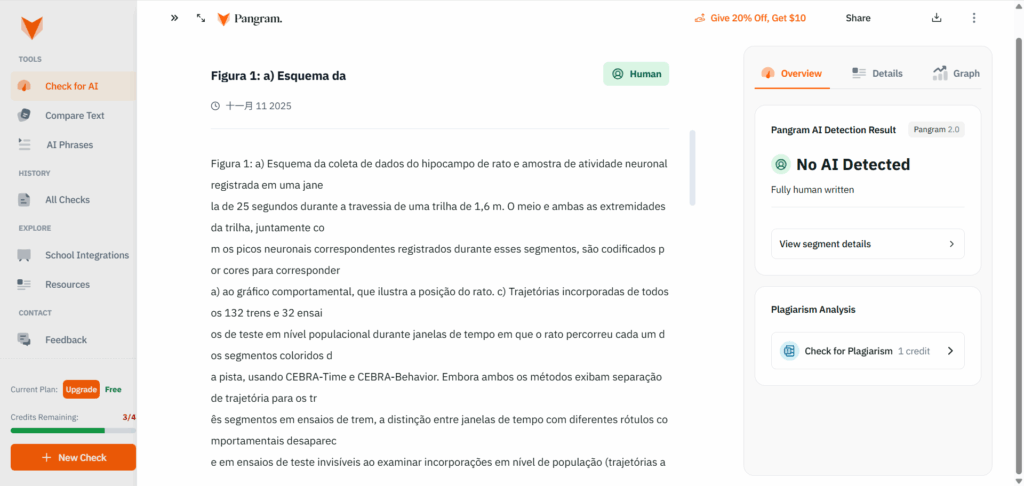

4. Pangram Labs

A newer tool with surprisingly strong tech.

Accuracy score: 7.6/10

Strengths:

- Good on academic writing

- Strong burstiness analysis

Weakness:

- False positives on creative writing

5. Detecting-AI

One of the most aggressive detectors.

Accuracy score: 7.4/10

Strengths:

- Catches lightly edited AI easily

Weaknesses:

- Sometimes flags native speakers as AI

- Too sensitive

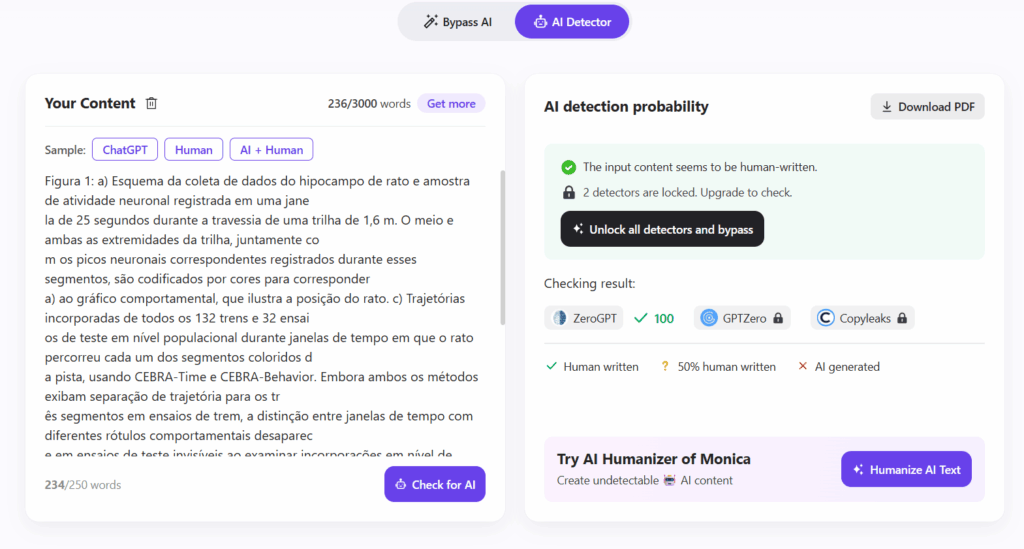

6. Monica AI Detector

Simple and fast.

Accuracy score: 7.3/10

Strengths:

- Easy to use

- Good for everyday checks

Weaknesses:

- Weak on complex essays

- Not ideal for academic writing

7. Undetectable AI Detector

Yes, the irony is real: this company sells both an AI detector and a tool for bypassing AI detectors.

Accuracy score: 7.0/10

Strengths:

- Reasonably accurate

- Strong on long-form text

Weakness:

- Sometimes overcorrects

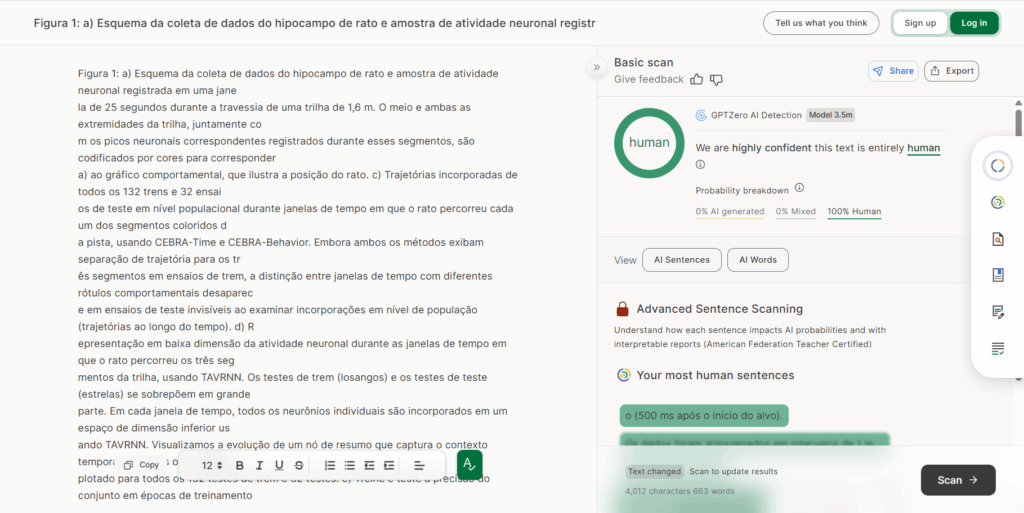

8. GPTZero

A huge name in AI detection.

Accuracy score: 6.8/10

Strengths:

- Trusted in many schools

- Established brand

Weakness:

- Prone to false positives, often flagging human writing as AI

9. Originality.ai

Strong in theory, inconsistent in practice.

Accuracy score: 6.5/10

Strengths:

- Good for web publishers

- Chrome extension is useful

Weaknesses:

- Extremely sensitive

- Flags polished English as AI too often

10. Phrasly.AI

Basic but functional.

Accuracy score: 6.2/10

Strengths:

- Free

- Fast results

Weaknesses:

- Lowest accuracy in the group

- Not reliable for academic use

How I Tested and Ranked These AI Detectors

I didn’t just click “analyze” and trust the numbers. To see how accurate AI detectors really are, I ran a series of hands-on tests:

- Human vs AI Content – I wrote essays, blog posts, and short answers myself. Then I compared them to AI-generated texts from ChatGPT and NoteGPT.

- Edited AI Texts – I lightly rewrote AI content to see if detectors could still catch it.

- Short vs Long Text – I checked both long essays and paragraphs under 100 words, since some detectors do well on the former but fail on the latter.

- Creative Writing vs Academic Writing – I wrote poetry, fictional stories, and research summaries. Each detector responded differently.

- Mixed Samples – I blended a few human sentences with AI sentences to test sensitivity.

After hundreds of tests, I averaged each detector’s results across all samples and turned them into a score out of 10. That’s how the ranking in the previous section came together.

A key insight: accuracy varies widely by text type and length. Academic writing gets flagged more often, while casual, short human writing sometimes slips past all detectors.
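Because a single averaged score can hide this, it helps to split errors by text type. Here’s a minimal sketch of that bookkeeping: the false positive rate (human text flagged as AI) and the false negative rate (AI text that passed as human) for each category. The `Result` records below are made-up placeholders, not my actual test data.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass
class Result:
    text_type: str    # e.g. "academic" or "creative" (hypothetical labels)
    is_ai: bool       # ground truth: was the sample AI-written?
    flagged_ai: bool  # did the detector call it AI?

def error_rates(results: list[Result]) -> dict[str, dict[str, Optional[float]]]:
    """Per text type: false positive rate and false negative rate (None if no samples)."""
    counts = defaultdict(lambda: {"human": 0, "false_pos": 0, "ai": 0, "false_neg": 0})
    for r in results:
        c = counts[r.text_type]
        if r.is_ai:
            c["ai"] += 1
            c["false_neg"] += int(not r.flagged_ai)  # AI text that passed as human
        else:
            c["human"] += 1
            c["false_pos"] += int(r.flagged_ai)  # human text flagged as AI
    return {
        t: {
            "false_positive_rate": c["false_pos"] / c["human"] if c["human"] else None,
            "false_negative_rate": c["false_neg"] / c["ai"] if c["ai"] else None,
        }
        for t, c in counts.items()
    }

# Made-up placeholder records, not real results.
sample = [
    Result("academic", is_ai=False, flagged_ai=True),
    Result("academic", is_ai=False, flagged_ai=False),
    Result("academic", is_ai=True, flagged_ai=True),
    Result("creative", is_ai=True, flagged_ai=False),
    Result("creative", is_ai=False, flagged_ai=False),
]
print(error_rates(sample))
```

Keeping the two error types separate matters: for a student, a false positive (being wrongly accused) is a very different problem from a false negative.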

Is AI Detection Reliable for Academic Use?

If you’re a student or educator, this is the question that matters most: can you trust AI detectors in schools or universities?

The truth is: not fully.

- False positives are common. A polished, well-structured essay can look “AI-generated” to most tools.

- False negatives happen too. AI content that’s lightly edited can seem human.

- Different tools give different results for the same text.

- Short answers and brainstorming notes are basically impossible to evaluate reliably.

So, while AI detectors can give a general indication of AI usage, relying on them as the sole measure of academic integrity is risky. They’re a warning system, not a verdict.

Is It Safe to Upload Unpublished Work to AI Tools?

Many writers and students worry: if I upload my own original work to an AI detector or AI tool, will the tool keep a copy, and could my work later be flagged as AI content?

From my experience and based on publicly stated policies of most major detectors:

- Most reputable AI tools say they do not store or reuse your text. NoteGPT and GPTZero, for example, claim to protect user privacy and to process text only temporarily.

- Always check the privacy policy. Some lesser-known detectors may store text to improve algorithms.

- Avoid using confidential or highly sensitive content on unknown or free online tools.

The takeaway: uploading unpublished work is generally safe with trusted AI detectors, but always err on the side of caution.

Using AI Ethically in Academic Writing

The elephant in the room: even if detectors are imperfect, how do you ethically use AI?

Here’s my advice:

- Use AI as a helper, not a replacement. Let it draft ideas, summarize readings, or generate outlines.

- Edit everything yourself. Adding your voice reduces AI patterns and improves originality.

- Cite AI tools if your institution requires it. For example, “Drafted using ChatGPT” or “Assisted by NoteGPT AI Detector.”

- Avoid bypassing detectors intentionally. Trying to trick detection tools can be considered academic dishonesty.

Remember, ethics in AI writing is about transparency and personal responsibility. Being open about AI assistance is always safer than trying to hide it.

Tips for Choosing the Right AI Detector

Based on my testing, here are practical tips:

- Look at accuracy – Choose tools with consistent results on multiple text types. NoteGPT and AI Detector performed best.

- Check transparency – Tools that explain why they flagged text are more helpful.

- Consider your text type – Some detectors work better on academic essays than on creative writing.

- Speed vs detail – Quick tools give yes/no answers; detailed tools give explanations. Decide which you need.

- Privacy matters – Always review policies before uploading sensitive work.

A combination of a reliable detector and personal editing is the best strategy to maintain both accuracy and ethical use.

Conclusion

So, are AI detectors accurate?

The answer is: partially. They are helpful, sometimes impressively so, but they’re not perfect. They can give a general sense of whether text is AI-generated, but results vary widely across tools, writing styles, and text lengths.

The top 10 tools I tested in 2025—NoteGPT, AI Detector, QuillBot, Pangram Labs, Detecting-AI, Monica, Undetectable AI, GPTZero, Originality.ai, and Phrasly.AI—each have strengths and weaknesses. NoteGPT stood out for accuracy and transparency, while others are faster or simpler but less reliable.

If you’re a student, writer, or researcher: use AI detectors as guides, not judges. Combine them with your judgment, ethical AI use, and editing skills. Always cite AI assistance if required, and never rely solely on a detector to determine originality or authenticity.

In 2025, AI detection is improving rapidly, but it’s still a mixed bag. The tools are useful, fun to experiment with, and sometimes scary—but with the right approach, you can navigate AI content safely, ethically, and intelligently.