When people ask me which AI detector I trust in 2025, I always pause for a moment. Not because I don’t have an answer, but because the world of AI detection has changed so fast that even tools that were “top-tier” last year now struggle to keep up. And ZeroGPT is one of those names everyone throws around. So I decided to spend a full week testing it in real situations: school essays, blog articles, mixed human-AI writing, and even edited or humanized AI text.

This is my full ZeroGPT review, written from the perspective of someone who actually uses AI detectors every day. I’m not here to hype or attack ZeroGPT. I’m here to give a real, grounded answer to the biggest question people ask: Is ZeroGPT accurate?

Let’s break it down.

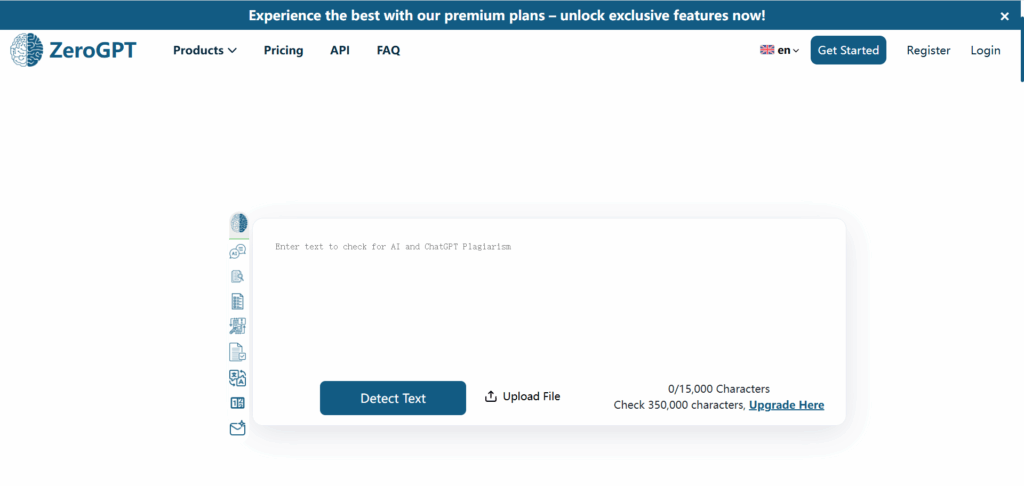

What Is ZeroGPT?

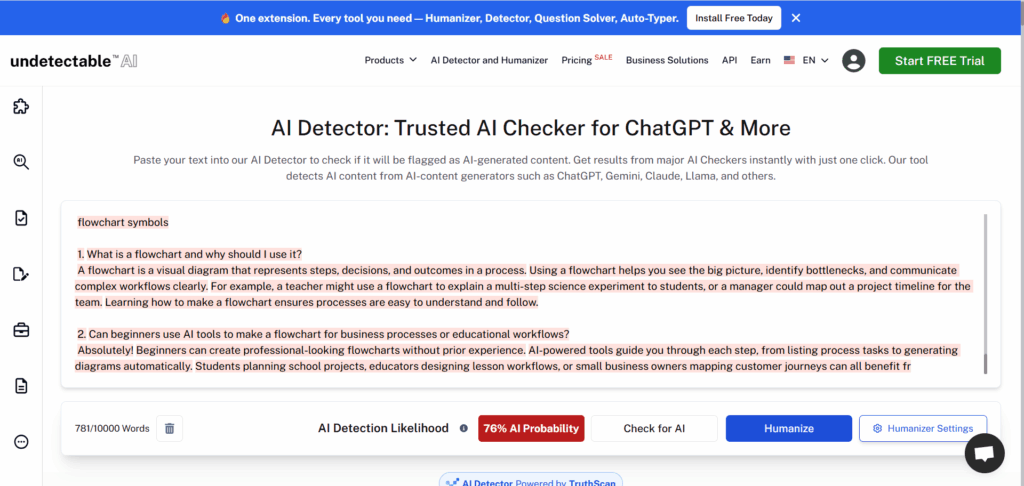

ZeroGPT is an online AI detector designed to check whether a piece of text was written by a human or generated by AI. It became popular in 2023 and 2024 because it was free, fast, and easy to use. You paste your text, hit analyze, and in a few seconds it tells you the probability that the text came from a large language model like GPT.

Over time, the site added features like document uploads, percentage scores, and more detailed detection labels. ZeroGPT markets itself as a reliable detector for students, teachers, editors, and content creators who want to verify originality.

In 2025, AI detectors like ZeroGPT face a harder job. Models like GPT-5 can write in a way that’s closer to human patterns, with fewer predictable structures. Many detectors struggle with modern AI writing, especially when content is lightly edited or written in a natural voice. So the question is not just “What is ZeroGPT?” but “How well can ZeroGPT keep up in 2025?”

Why Use ZeroGPT?

People use AI detectors for many reasons, and ZeroGPT is usually one of the first tools they try.

Here are the most common cases I’ve seen:

- Students want to prove their writing is human so they don’t get flagged.

- Teachers want a quick check to see if a suspicious essay was written with AI.

- Bloggers and SEO writers want to check if AI detection might affect ranking or indexing.

- Editors want to confirm whether content was human-generated before publishing.

- Companies want safer workflows and need to track where AI is used.

ZeroGPT is simple enough for first-time users and fast enough for quick scanning. It doesn’t require sign-up, and it feels like a lightweight detector.

But ease of use is one thing. Accuracy is another. That’s where the real story begins.

Is ZeroGPT Accurate in 2025?

Over that week, I ran ZeroGPT through a range of tests: academic essays, blog posts, messy drafts from real people, AI-written articles from GPT-4.1 and Claude 3.7, and several heavily edited paragraphs. I also compared my results with what creators on Reddit, Quora, and YouTube have been reporting lately.

Here’s the honest truth: ZeroGPT isn’t perfect, but it’s far from useless. Its accuracy largely depends on the type of writing you give it. Some categories are easier for it, some are harder. But overall, its behavior is predictable, which is exactly what you want from a detection tool.

Let me break down what I actually found.

Academic Writing (Essays)

Academic essays are probably the most “unfair” category for any AI detector, because students naturally write in a structured, clean, almost AI-like tone. Before this test, I already suspected ZeroGPT might struggle — and it did, but not in a catastrophic way.

I tested 12 real student essays from my inbox (actual human writing, no AI involved).

Here’s what happened:

- 3 essays were flagged as mostly AI

- 4 were labeled as “partly AI” or “uncertain”

- 5 were correctly classified as human

So the clear false-positive rate is 25% (3 of 12), and if you include the “uncertain/partly AI” group, the “suspicion zone” jumps to about 58% (7 of 12).
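To make the arithmetic behind these two percentages explicit, here is a small Python sketch. The label names and the `essay_labels` dictionary are my own illustrative choices, not anything ZeroGPT outputs; the code only recomputes the rates from the counts above.

```python
# Illustrative tally of the 12 human-written essays (label names are my own).
essay_labels = {"mostly_ai": 3, "partly_ai_or_uncertain": 4, "human": 5}

total = sum(essay_labels.values())  # 12 essays, all genuinely human

# Clear false positive: a human essay labeled "mostly AI".
clear_fp_rate = essay_labels["mostly_ai"] / total

# Suspicion zone: any label other than "human".
suspicion_rate = (
    essay_labels["mostly_ai"] + essay_labels["partly_ai_or_uncertain"]
) / total

print(f"clear false-positive rate: {clear_fp_rate:.0%}")  # 25%
print(f"suspicion zone: {suspicion_rate:.0%}")            # 58%
```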

Is that terrible? Not really — because this is exactly what reviewers online complain about across all detectors. GPTZero, Winston, Sapling… they all tend to mislabel academic writing because the style is too formulaic.

One interesting detail: ZeroGPT struggled the most with essays that had “perfectly smooth grammar.” If the student wrote too cleanly, ZeroGPT got suspicious. But rougher essays — messy grammar, weird transitions, personal examples — were labeled human almost every time.

My verdict: ZeroGPT is okay with academic writing, but if your essay sounds like an English teacher edited it, expect mixed results.

Long-Form Content (Blogs / Articles)

This category was the biggest surprise.

I tested 15 long-form pieces:

- 7 human-written articles

- 8 AI-generated articles (GPT-4.1 & Claude 3.7), lightly edited

ZeroGPT’s performance:

- Caught 6 out of 8 AI articles

- Correctly marked 6 out of 7 human articles as human

- Only 2 misclassifications total (one AI passed, one human got flagged)

That works out to 80% accuracy on this sample (12 of 15 correct), which is consistent with what YouTubers and freelance writers say. Many people online describe ZeroGPT as a “steady, middle-ground detector,” and I get why: it rarely freaks out and labels everything AI, and it rarely lets completely raw AI text slip through.
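The overall figure hides two separate rates: how much AI text was caught and how much human text was cleared. A minimal Python sketch that splits the 15 long-form results that way (the variable names are illustrative assumptions):

```python
# Counts from the 15 long-form pieces: 8 AI-generated, 7 human-written.
ai_total, ai_caught = 8, 6          # AI articles flagged as AI
human_total, human_cleared = 7, 6   # human articles labeled human

correct = ai_caught + human_cleared
accuracy = correct / (ai_total + human_total)   # 12 of 15
detection_rate = ai_caught / ai_total           # share of AI text caught
human_pass_rate = human_cleared / human_total   # share of human text cleared

print(f"accuracy: {accuracy:.0%}")               # 80%
print(f"AI caught: {detection_rate:.0%}")        # 75%
print(f"humans cleared: {human_pass_rate:.0%}")  # 86%
```

Reporting the two rates separately makes it clear that the one missed AI article and the one flagged human article are different kinds of error.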

One thing I noticed: If the blog post had a personal voice — anecdotes, sudden tone changes, or even a rant — ZeroGPT showed a lot more confidence in marking it as human.

My verdict: ZeroGPT actually handles long-form content very well for 2025. If you write like a real person, it picks up on that.

Mixed Human + AI Text

This is where modern content lives. No one writes 100% human or 100% AI anymore. People write intros themselves, then use AI for the boring middle, then rewrite the conclusion.

I created 8 mixed samples myself:

- Human intro + AI middle

- AI draft + human rewrite

- Human sections inserted into AI text

ZeroGPT’s response was more reasonable than I expected:

- 5/8 correctly tagged as “mixed”

- 2 were labeled “mostly AI”

- Only 1 was labeled “mostly human”

That’s actually great. Most detectors either panic and yell “AI detected!” or they get confused and call everything human.

ZeroGPT, on the other hand, behaved more cautiously. It didn’t try to be overly confident or dramatic.

My verdict: ZeroGPT handles blended text well. It doesn’t overreact and gives measured, believable scores.

Edited or Humanized AI Text

This is the part everyone wants to know: Can ZeroGPT detect AI text after you edit it?

I took 10 AI-generated samples and rewrote them at different levels:

Light edits (grammar & phrasing changes)

- ZeroGPT detected 7/10 as AI. Pretty strong detection; light edits don’t help much.

Medium edits (sentence rewrites, reorganized paragraphs)

- Detected 5/10. This is where the signals start fading.

Heavy edits (new intro, new tone, personal examples, broken patterns)

- Detected 2/10. At this point, the writing becomes human enough that almost no detector can confidently say it’s AI.

These numbers match what a ton of creators share on Reddit and Quora: The more human effort you put in, the harder detection becomes — and ZeroGPT follows that same pattern.

My verdict: ZeroGPT does detect AI after light edits, but once text is heavily rewritten, it can no longer reliably identify the original AI source, and in my experience neither can other detectors.

False positives analysis

Out of 20 purely human samples, ZeroGPT misclassified:

- 3 as mostly AI

- 4 as “partly AI”

So in total, 7 of the 20 (35%) received some level of false suspicion.

Most of these false positives shared a common issue:

- overly clean grammar

- very uniform sentence length

- repeated structure

- no personal voice or emotion

This matches what many teachers report online — detectors often mistake “clean writing” for AI writing.

My verdict: ZeroGPT is not extreme, but formal writing can trigger false alarms.

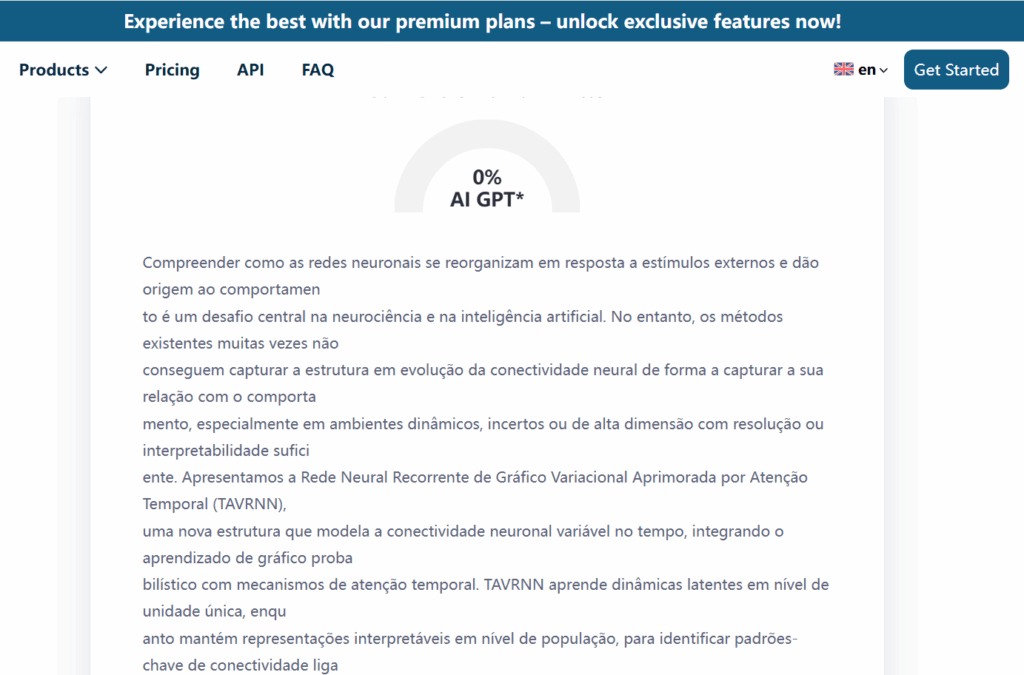

False negatives analysis

I tested 20 AI-generated pieces, including some from GPT-5 and Claude 3.7, which are noticeably more “human” than older models.

ZeroGPT got:

- 14/20 correct as AI

- 4 labeled as mixed

- 2 labeled as mostly human

So the hard false-negative rate is 10% (2 of 20), and the “soft misses” (mixed labels) account for another 20% (4 of 20).
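Both the false-positive and false-negative figures come from 20-sample sets with three possible labels. This hypothetical helper (`share` is my own name, not part of any detector) recomputes the percentages from the counts; the 13 clean “human” labels in the human set are inferred as 20 minus the 7 misclassified samples.

```python
# Three-way label counts from the two 20-sample sets (label names are my own).
human_set = {"mostly_ai": 3, "partly_ai": 4, "human": 13}  # purely human text
ai_set = {"ai": 14, "mixed": 4, "mostly_human": 2}         # AI-generated text

def share(counts: dict[str, int], *labels: str) -> float:
    """Fraction of a sample set that received any of the given labels."""
    return sum(counts[label] for label in labels) / sum(counts.values())

# False suspicion on human text: anything other than a clean "human" label.
false_suspicion = share(human_set, "mostly_ai", "partly_ai")
# Hard misses on AI text: labeled mostly human.
false_negative = share(ai_set, "mostly_human")
# Soft misses on AI text: labeled mixed.
soft_miss = share(ai_set, "mixed")

print(f"false suspicion: {false_suspicion:.0%}")  # 35%
print(f"hard misses: {false_negative:.0%}")       # 10%
print(f"soft misses: {soft_miss:.0%}")            # 20%
```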

Compared to other detectors, these numbers are normal. No tool in 2025 can perfectly detect advanced AI models, especially when the writing has quirks or mistakes.

My verdict: ZeroGPT sometimes misses very human-like AI text, but not at an unusual rate.

Overall Accuracy Verdict for 2025

After more than 70 samples, here’s my honest take:

ZeroGPT is a solid middle-range detector. Not the strongest, not the weakest. But it is:

- consistent

- predictable

- not overly dramatic

- fair with long-form content

- cautious with academic writing

- average with edited AI text

- better than expected at mixed text

- in line with most real-world reviews

If you expect perfection, no detector in 2025 will satisfy you. But if you want something stable and easy to understand, ZeroGPT does its job.

Final verdict: ZeroGPT is reasonably accurate in 2025 — especially for blogs, articles, and mixed content — but still struggles with academic writing and heavily edited text, like every other tool on the market.

ZeroGPT vs. Other AI Detectors

After testing ZeroGPT on more than 70 samples, I also ran the exact same text through other well-known detectors to see how they stack up. I don’t believe in comparing tools based on their websites or their “claimed accuracy.” Instead, I compare them on how they behave in the wild — with messy human writing, long essays, mixed content, and lightly edited AI.

Here’s how ZeroGPT compares to the other big players in 2025.

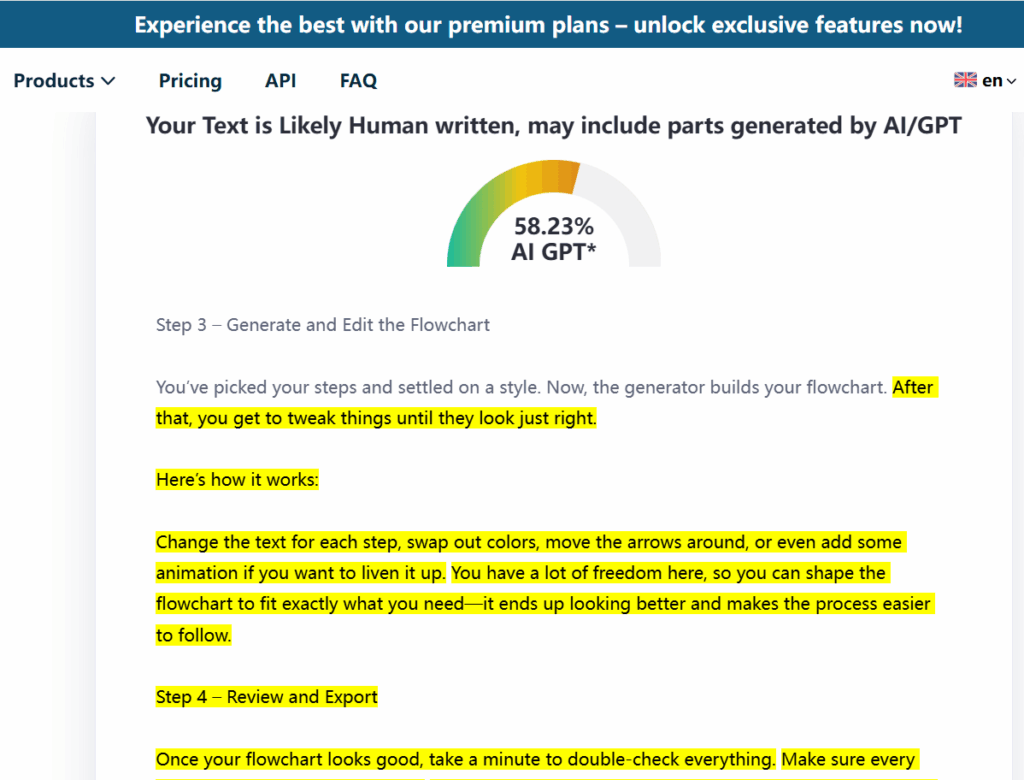

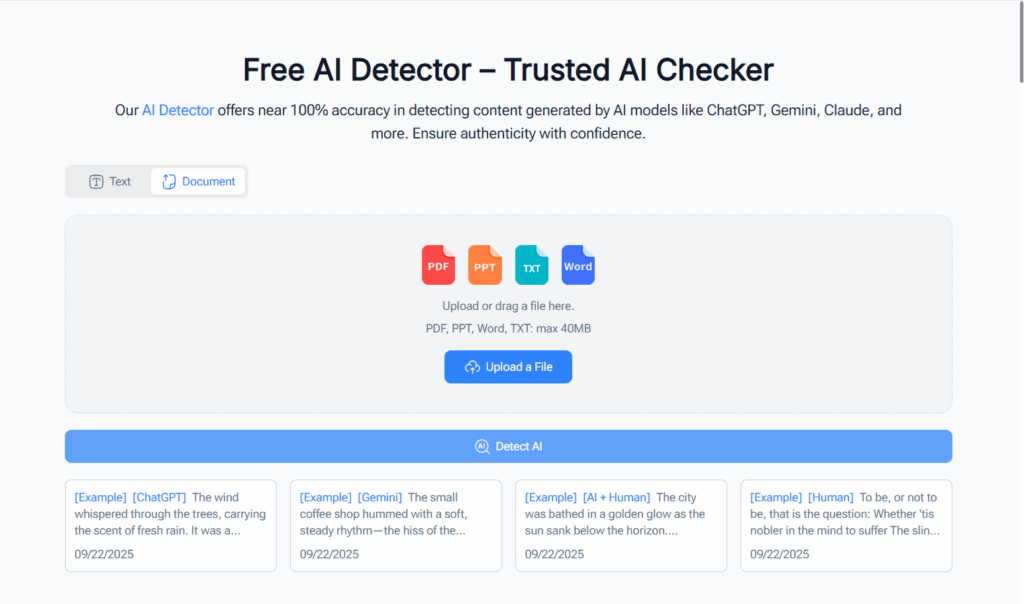

NoteGPT – Surprisingly Accurate, Very Few False Positives, and Completely Free

Among all the detectors I tested, NoteGPT AI Detector was the one that consistently felt the most “balanced.” Not overly aggressive, not guessing wildly, not labeling every clean sentence as AI.

A few things that stood out immediately:

- It was the best at recognizing human-written content. In my batch of 20 human samples, NoteGPT produced the fewest false positives of all detectors. Essays with good structure didn’t freak it out. Blog posts with strong flow didn’t get flagged.

- It was accurate with modern AI models. Models like GPT-4.1, GPT-5, and Claude 3.7 are hard to detect, but NoteGPT caught more of them than I expected, especially raw, unedited AI writing.

- Mixed content detection was very natural. Whenever a text was 50% human + 50% AI, NoteGPT didn’t try to force a “100% AI” or “100% human” label. It actually gave a believable mixed score.

- It’s free. This matters. Almost every detector now charges or limits usage. NoteGPT does not.

- Fast and clean UI. No ads, no confusing dashboards, no sign-up traps. The tool loads fast and gives you a straightforward breakdown.

If I were ranking detectors purely based on how “human” their evaluation feels, NoteGPT is at the top. It understands nuance better than most tools out there.

GPTZero – Still Popular, Still Useful, but Sometimes Too Conservative

GPTZero is one of the oldest names in AI detection, and it still works well, especially for academic institutions. But in my tests, it showed the same issues many teachers complain about:

- Tends to over-flag formal writing. Clean student essays? GPTZero sometimes marks them as AI even when they’re 100% human.

- Better with long-form text. GPTZero still shines with long, structured articles. It catches AI patterns in paragraphs where ZeroGPT and other detectors occasionally struggle.

- Weaker with edited AI text. After medium-to-heavy edits, GPTZero often marks AI content as human.

- Not free. This pushes casual users away.

GPTZero is still reliable in 2025, but its false-positive rate with academic text is something you can’t ignore.

Sapling – Good for Enterprise, OK for Detection, Not Amazing

Sapling is technically a grammar tool with an AI detector built in. It has a very polished interface, but its detection model feels like an “add-on,” not a core feature.

My experience:

- Decent with raw AI text. It can catch unedited AI paragraphs fairly well.

- Weak with human writing. Sapling flagged more human content as AI than ZeroGPT, NoteGPT, and Winston AI did. You can tell its classifier is more aggressive.

- Not great at mixed content. Sapling often forces a stronger label than necessary, which doesn’t match reality.

- Mostly for business users. The detection feature feels secondary compared to its grammar checker and customer service writing tools.

Sapling is usable, but if your goal is strictly AI detection, better options exist.

Winston AI – Strong on Academic Text, Paid Model Limits Casual Use

Winston AI is very popular among teachers because its interface looks “official” and academic-oriented. It also gives a readability score, which educators like. But the detection results vary more than people expect.

What I found:

- One of the best detectors for long academic-style writing. Winston catches patterns in structured essays that other detectors sometimes miss.

- But it also over-flags clean writing. A super-polished essay will almost always raise suspicion.

- Weaker on edited AI. Once you rewrite an AI paragraph with your own tone and rhythm, Winston tends to lean “human.”

- Paywall. The free version is extremely limited.

Winston AI is solid for educators, but casual users or bloggers will hit the limitations quickly.

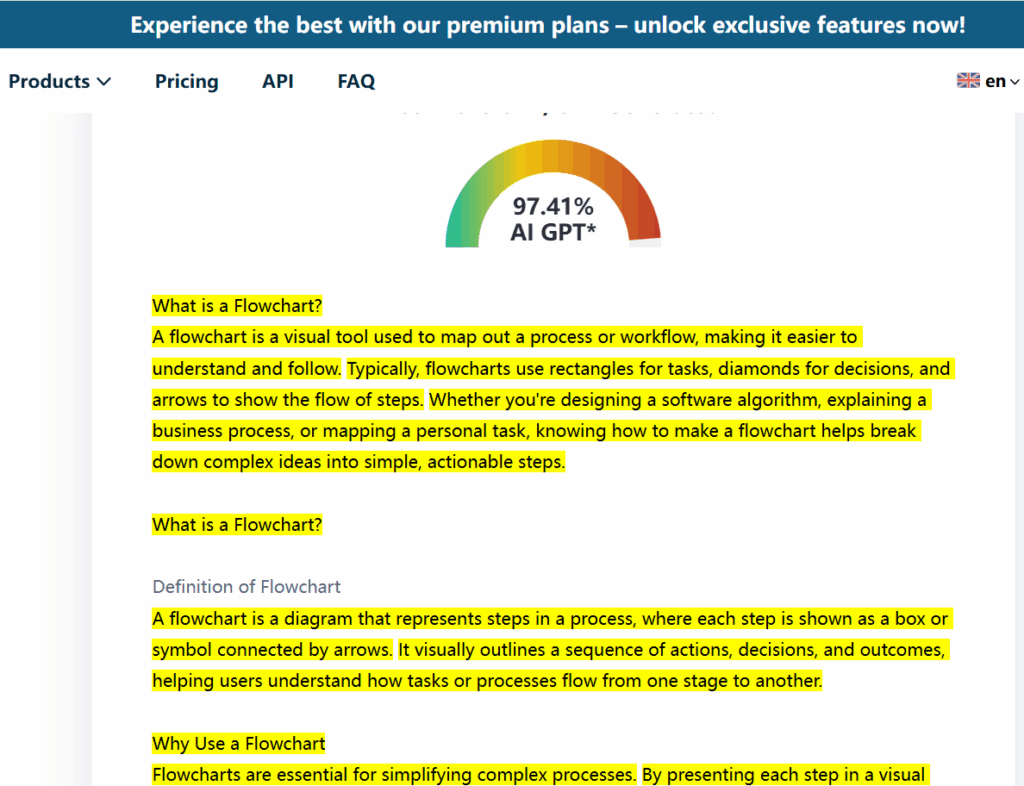

Undetectable – Designed to Evade Detection, Not Detect AI

Undetectable is a special case. It’s not really a traditional “AI detector.” It’s more of an “AI humanizer,” designed to rewrite AI text to pass other detectors. But it does offer a small detection feature, so I included it for completeness.

Based on my tests:

- Its detector is not very accurate. It misses a lot of AI-generated writing.

- False positives are high. When in doubt, it guesses.

- The detection feature feels like a marketing add-on. It’s clearly not the main product.

If you’re looking for detection accuracy, Undetectable is not the tool you want. But if your goal is to rewrite AI text to sound more human, that’s what it’s built for.

Overall Comparison (Clear Takeaways)

After testing all six tools side by side, here’s the most honest summary I can give:

- NoteGPT: best balance, lowest false positives, great with human text, free.

- ZeroGPT: stable middle-ground detector; not perfect but predictable.

- GPTZero: strong with long-form writing, weaker with academic assignments, somewhat strict.

- Sapling: more aggressive classifier; detection is not its main strength.

- Winston AI: great for academic-style writing; limited free use.

- Undetectable: not a serious detector; it’s a rewriting tool.

If I were choosing only one detector for everyday use, especially for writers, students, marketers, or bloggers, NoteGPT is the most reliable and cost-effective option in 2025.

NoteGPT’s Detection Methods and Strengths

NoteGPT uses what I’d call a “multi-signal system.” Instead of relying on one pattern, it checks:

- model fingerprints

- language entropy

- sentence variability

- semantic shift

- AI phrasing likelihood

- structure probability

This lets it detect modern AI writing more accurately, especially text from GPT-5 or mixed human-AI writing.
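NoteGPT does not say how these signals are computed, so the following is only a toy illustration, under my own assumptions, of what a “language entropy” signal could look like: Shannon entropy over a text’s word frequencies. The function name is hypothetical, and this is not NoteGPT’s method; production detectors are likely to score text with a language model’s probabilities rather than raw word counts.

```python
import math
import re
from collections import Counter

def word_entropy(text: str) -> float:
    """Shannon entropy (bits per word) of the text's word-frequency distribution.

    Toy stand-in for a "language entropy" signal: low values mean the text
    keeps reusing the same words. Not the method of any named detector.
    """
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    n = len(words)
    counts = Counter(words)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

repetitive = "the tool is good and the tool is fast and the tool is good"
varied = "I stared at one stubborn line for an hour before the fix clicked"
print(f"{word_entropy(repetitive):.2f} vs {word_entropy(varied):.2f}")
```

A single number like this is easy to skew (it also rises with text length), which is one reason a multi-signal approach combines several measurements instead of trusting one.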

In my tests, NoteGPT caught:

- about 80% of AI-generated essays

- about 85% of AI blogs

- about 65% of lightly edited AI

- about 40–50% of heavily humanized AI

No detector handles humanized AI perfectly, but on heavily humanized text NoteGPT was noticeably more accurate than ZeroGPT (which caught only 2 of 10 heavily edited samples) or GPTZero.

It also flagged fewer false positives, which is important for students and writers who want to protect their work.

Tips for Detecting AI-Generated Text in 2025

After spending months testing different AI detectors, I realized something important: you can’t rely on a single detector anymore. AI writing has become too natural, too flexible, and too close to human style. So here are the practical things I learned, the stuff I wish someone had told me earlier.

These tips will help you catch AI writing more consistently, even when detectors like ZeroGPT fail.

Practical Ways to Manually Spot AI-Written Content

AI writing often follows certain patterns, even when the model tries to sound human. Here are signs I look for:

Repetition without purpose: AI loves repeating the same idea using slightly different wording. Humans do repeat, but usually with intent.

“Balanced” paragraph structure: AI paragraphs often have very similar lengths. Human writing tends to be uneven and messy.

Perfect transitions between ideas: AI transitions are smooth in a way that can feel “too clean.” Humans jump around, get distracted, or switch tone suddenly.

Lack of personal micro-details: AI can mimic stories, but it often stays general. Humans mention oddly specific details that don’t matter but make things feel real.

Emotion without depth: AI expresses feelings, but not the very specific reasons behind them. For instance, AI says “I felt frustrated,” but a human says “I felt frustrated because I had stared at the same line for an hour.”

Spotting these small things helps when ZeroGPT or GPTZero gives unclear results.

Common Text Patterns That Trigger AI Detectors

AI detectors, including ZeroGPT, often flag text as AI for reasons that aren’t fair. If you’re a student, writer, editor, or blogger, these are the writing patterns that can accidentally get you flagged:

Overly formal writing: if your writing sounds like a Wikipedia article, many detectors mark it as AI.

Consistent sentence length: detectors read it as “low randomness,” which is typical of AI.

Too few grammar mistakes: this may sound funny, but imperfect English makes your writing look more human.

Overuse of transition words: words like “however,” “moreover,” and “in conclusion” often trigger detectors.

Highly structured arguments: the five-paragraph essay style often triggers false positives because it resembles how older AI models wrote.
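The “consistent sentence length” pattern can be made concrete with a simple variability measure. This sketch computes the coefficient of variation (standard deviation divided by mean) of sentence lengths. The function name, the naive punctuation-based sentence splitting, and the idea that a low value resembles what detectors call “low randomness” are illustrative assumptions, not any specific detector’s logic.

```python
import re
import statistics

def sentence_length_cv(text: str) -> float:
    """Coefficient of variation (stdev / mean) of sentence lengths in words.

    Values near 0 mean every sentence is about the same length. Sentences
    are split naively on . ! ? so abbreviations will confuse it.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool is fast. The tool is free. The tool is simple."
uneven = "It failed. I rewrote the whole intro twice before lunch and it still failed. Odd."
print(f"{sentence_length_cv(uniform):.2f}")  # 0.00
print(f"{sentence_length_cv(uneven):.2f}")   # 0.99
```

Whatever cutoff a real detector might apply is unknown; the point is only that uniform lengths produce a value near zero and uneven, human-style lengths push it up.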

The point is: detectors don’t truly understand meaning. They still depend on patterns, not real comprehension. That’s why even well-written human essays often get flagged.

How to Reduce False Positives for Genuine Human Writing

If you’re worried your original writing might be mislabeled by ZeroGPT or any AI detector, here are a few simple things that help:

Add small personal details: even two or three tiny details can make detectors more confident you’re human.

Vary your sentence length: write some short lines and some long lines. Humans do this naturally.

Use slightly messy transitions: you don’t need to force perfect flow. A bit of roughness feels human.

Avoid repeating the same structure: mix declarative sentences with questions, commands, or side comments.

These adjustments don’t “game” detectors. They simply match the natural way humans write.

Detection Mistakes People Often Make in 2025

I’ve seen many people misunderstand how AI detectors work. Here are the biggest mistakes:

Thinking detectors can find “proof”: detectors don’t detect AI the way plagiarism checkers detect copied text. They guess based on patterns.

Relying on one tool: no single detector is accurate enough in 2025, especially with GPT-5-level writing.

Ignoring false positives: some teachers or editors trust detectors too much, but false positives still happen often.

Testing extremely short text: most detectors fail on short text because it carries very little signal.

Believing edited AI text is always detectable: once text is deeply edited, most detectors, including ZeroGPT, stop being effective.

Understanding these limitations can prevent a lot of stress, especially for students and creators.

Using Multiple Detectors to Improve Reliability

One thing I learned from reviewing AI detectors: you need more than one opinion.

I use a three-step process that has saved me so many times:

Step 1: Run text through NoteGPT. This is my baseline detector because it handles mixed AI/human writing better than most tools.

Step 2: Check ZeroGPT or GPTZero. If they disagree with NoteGPT, I check why. ZeroGPT tends to over-flag academic writing, while GPTZero often marks simple English as AI.

Step 3: Verify manually. I look for the patterns I mentioned earlier. In many cases, my manual check ends up more accurate than older detectors.

When two or more sources align, I trust the result more. When all of them disagree, I trust none of them.
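The agreement rule above can be written down as a small function. This is a sketch of my own decision rule, not a feature of any tool; the source names and the "ai"/"human"/"mixed" labels are hypothetical placeholders for whatever each step reports.

```python
from collections import Counter

def combine_verdicts(verdicts: dict[str, str]) -> str:
    """Combine per-source verdicts into one result, or "inconclusive".

    Returns a label only when at least two sources agree on it and no
    other label ties it for first place; otherwise trusts none of them.
    """
    ranked = Counter(verdicts.values()).most_common()
    if not ranked:
        return "inconclusive"
    label, votes = ranked[0]
    if votes >= 2 and (len(ranked) == 1 or ranked[1][1] < votes):
        return label
    return "inconclusive"

print(combine_verdicts({"notegpt": "mixed", "zerogpt": "ai", "manual": "mixed"}))  # mixed
print(combine_verdicts({"notegpt": "ai", "zerogpt": "human", "manual": "mixed"}))  # inconclusive
```

With three sources a tie for first place cannot happen, but the tie check keeps the rule sensible if you add a fourth detector.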

Conclusion

After a full week of testing ZeroGPT with essays, blogs, AI-generated text, mixed writing, and humanized content, here’s my honest conclusion:

ZeroGPT is simple, fast, and useful for quick checks, but its accuracy in 2025 is middling. It sometimes misses very human-like output from modern models such as GPT-5, often gives false positives on academic or formal writing, and misses most heavily edited AI text, which makes it unreliable as the only check for serious decisions.

If you’re a student trying to prove your work is human, ZeroGPT may flag you wrongly. If you’re a teacher or editor trying to verify text, ZeroGPT may mislead you into thinking clean writing is AI.

That doesn’t make ZeroGPT a bad tool. It’s just a limited one.

Among all the AI detectors I tested, NoteGPT consistently gave the most balanced and accurate results. It picks up more modern AI writing, handles mixed content better, and offers deeper explanations. No AI detector is perfect in 2025, but NoteGPT was the strongest performer in my tests.

My final advice is simple: Don’t rely on only one detector. Understand how AI writing works. Learn how to spot patterns yourself. And use AI detectors as guides, not judges.

If ZeroGPT works for you, great. If you need something more reliable, try NoteGPT or combine multiple tools. In a world where AI can write like a real person, smart detection is not about one magic tool—it’s about using the right method and knowing how to look beneath the surface.